Technology News

Stay up to date on the latest in intelligent building solutions and innovations from Paige Datacom Solutions.

Right Sizing Your Pathways—From Tray to Conduit

This blog post describes the requirements and recommendations for sizing communications cabling pathways, as specified by industry codes and standards such as the National Electrical Code (NEC) and the TIA-569 standard. It explains that proper pathway sizing is crucial to the performance and future-proofing of a cable plant and covers the 2...

Latest News

There Is a Hole in the Boat: Why Access Control Professionals Need to Move From Wiegand to OSDP

Essential Cable Planning for Healthcare Facilities

But what about Coax?

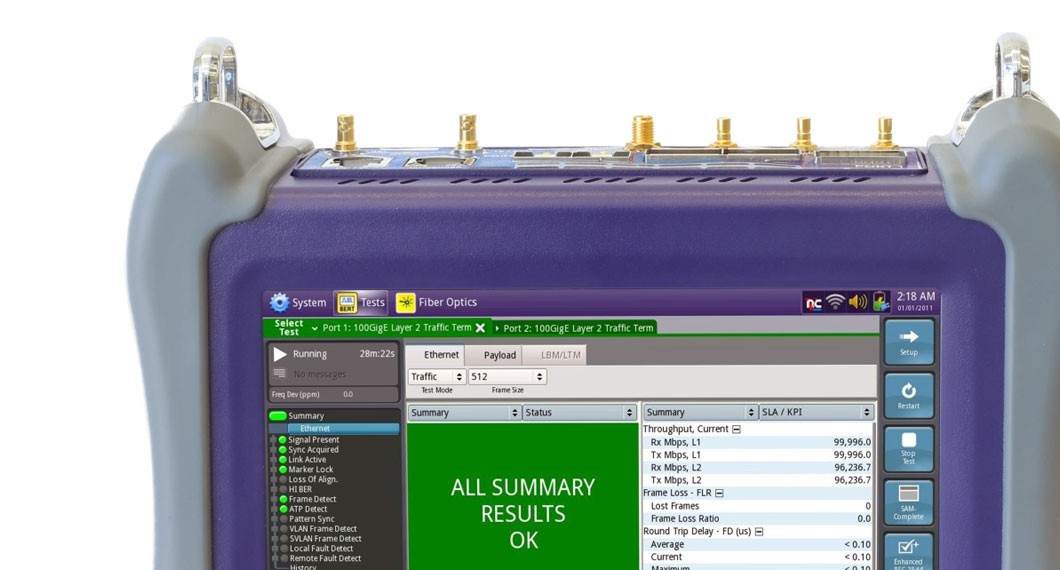

Testing RFC 2544 and GameChanger

Lengthonomics at 1006' with no repeaters. Yes, really!

Lengthonomics

State of the Data Center - Tides are Shifting

Are Fabrics/Unified Computing the Solution for Rapid Deployment and Ease?
